Summary

Temi (pronounced “Timmy”) is a robot created by Robotemi and designed primarily as a personal assistant and companion. It combines artificial intelligence, voice recognition, and autonomous navigation: Temi can move around on its own, respond to voice commands, and perform tasks such as answering questions, playing music, making video calls, and controlling smart home devices. A 10.1-inch touchscreen provides the user interface. Temi also features facial recognition and can follow users around, giving a personalized, hands-free experience. These capabilities suit both home and business use, especially in healthcare, hospitality, and customer service settings.

Implementation in Healthcare

Temi is a highly adaptable platform for custom development. In our case, we’ve integrated a smell sensor (developed by Smart Nanotubes) with the robot so that it gathers scent data as it navigates different areas. Each sensor reading covers 64 smell channels along with temperature and humidity, and these readings can be used to identify specific odors in real time.
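To make the shape of one reading concrete, here is a minimal sketch of how a sample could be represented in code. The comma-separated input format, the field names, the Celsius and percent units, and the `parse_reading` helper are all assumptions for illustration; they are not the sensor's actual output format or API.

```python
from dataclasses import dataclass
from typing import List

NUM_CHANNELS = 64  # number of smell channels per reading, as described above


@dataclass
class SmellReading:
    """One sensor sample (hypothetical layout, not the vendor's format)."""
    channels: List[float]   # 64 smell channel values
    temperature_c: float    # assumed to be in degrees Celsius
    humidity_pct: float     # assumed to be relative humidity in percent
    location: str = ""      # optional label for where the robot was

    def __post_init__(self) -> None:
        if len(self.channels) != NUM_CHANNELS:
            raise ValueError(
                f"expected {NUM_CHANNELS} channels, got {len(self.channels)}"
            )

    def feature_vector(self) -> List[float]:
        """Flatten the reading into 66 numbers for downstream models."""
        return list(self.channels) + [self.temperature_c, self.humidity_pct]


def parse_reading(line: str, location: str = "") -> SmellReading:
    """Parse an assumed CSV line: 64 channel values, temperature, humidity."""
    values = [float(v) for v in line.strip().split(",")]
    if len(values) != NUM_CHANNELS + 2:
        raise ValueError(f"expected {NUM_CHANNELS + 2} values, got {len(values)}")
    return SmellReading(
        channels=values[:NUM_CHANNELS],
        temperature_c=values[NUM_CHANNELS],
        humidity_pct=values[NUM_CHANNELS + 1],
        location=location,
    )
```

Validating the channel count at construction time means a truncated or malformed sample fails early instead of silently skewing later analysis.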

By combining Temi’s mobility with this smell detection, the robot can monitor environments such as assisted living homes or hospital floors and identify unexpected gases or other abnormalities. In assisted living settings, for example, Temi can detect odors associated with bodily waste and alert care staff to potential issues. The real-time temperature and humidity readings are also useful in temperature-sensitive environments, like hospital floors, where they allow timely alerts when conditions deviate from the norm.
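One simple way to decide that a condition has deviated from the norm is to compare each new reading against a rolling baseline. The sketch below is illustrative only: the `DeviationMonitor` class, the window size, and the three-standard-deviation threshold are assumptions, not the alerting logic used on the robot.

```python
from collections import deque
from statistics import mean, stdev


class DeviationMonitor:
    """Flag values that stray far from a rolling baseline (hypothetical helper)."""

    def __init__(self, window: int = 20, threshold: float = 3.0,
                 min_samples: int = 5) -> None:
        self.history = deque(maxlen=window)  # recent normal readings
        self.threshold = threshold           # allowed deviation, in std devs
        self.min_samples = min_samples       # readings needed before alerting

    def update(self, value: float) -> bool:
        """Record a reading and return True if it should raise an alert."""
        alert = False
        if len(self.history) >= self.min_samples:
            mu = mean(self.history)
            sigma = stdev(self.history)
            if sigma > 0:
                alert = abs(value - mu) > self.threshold * sigma
            else:
                # A perfectly flat baseline: any change counts as a deviation.
                alert = value != mu
        if not alert:
            # Only normal readings update the baseline, so a sustained
            # anomaly keeps alerting instead of becoming the new norm.
            self.history.append(value)
        return alert
```

The same monitor can be applied to temperature, humidity, or an individual smell channel, with one instance per signal.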

Datasets/Models

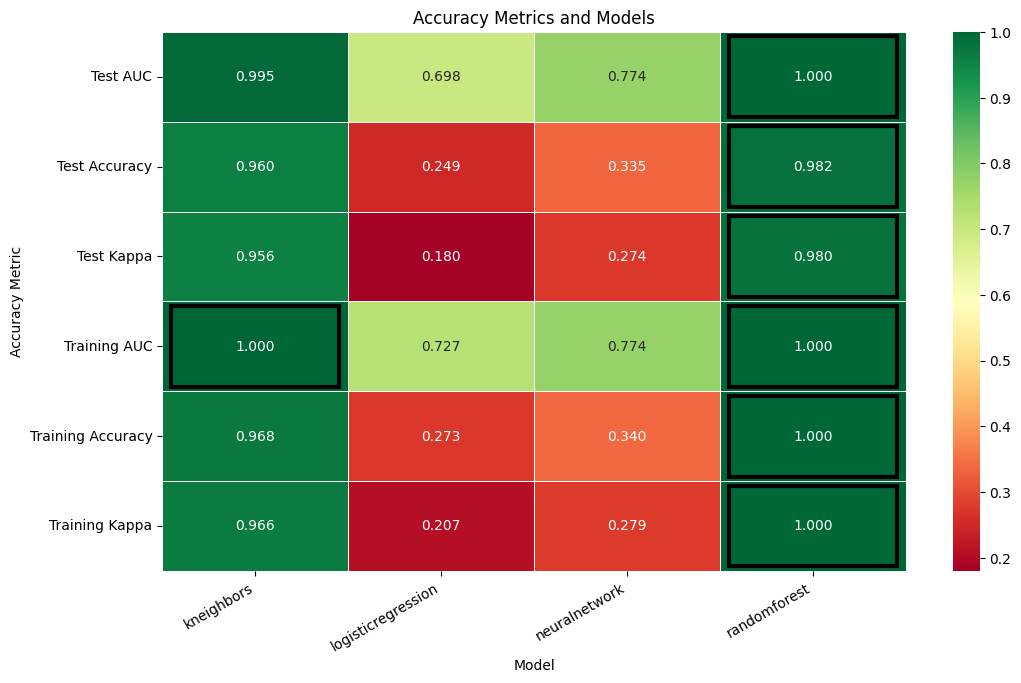

In the meantime, we have included a subset of preliminary test results below. By analyzing scent data from various locations around our office and predicting the robot’s position based on this information, we achieved the following outcomes.
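As a rough illustration of predicting position from scent data, the sketch below uses a nearest-centroid classifier: it averages the readings collected at each labeled location and assigns a new reading to the closest average. This technique is chosen here only for brevity; the model behind the preliminary results is not specified, and the function names and toy three-value vectors are hypothetical (real readings would have 64 channels plus temperature and humidity).

```python
import math
from collections import defaultdict


def fit_centroids(samples):
    """Average the feature vectors for each location label.

    samples: iterable of (feature_vector, location_label) pairs.
    Returns a dict mapping each label to its mean feature vector.
    """
    sums = {}
    counts = defaultdict(int)
    for features, label in samples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
        for i, value in enumerate(features):
            sums[label][i] += value
        counts[label] += 1
    return {
        label: [s / counts[label] for s in total]
        for label, total in sums.items()
    }


def predict_location(centroids, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))


# Toy data with 3 values per reading, for illustration only.
training = [
    ([1.0, 0.0, 0.0], "kitchen"),
    ([0.9, 0.1, 0.0], "kitchen"),
    ([0.0, 1.0, 0.0], "lab"),
    ([0.1, 0.9, 0.0], "lab"),
]
centroids = fit_centroids(training)
guess = predict_location(centroids, [0.8, 0.2, 0.0])
```

In practice the channels would likely need normalization before distances are compared, since raw sensor values can sit on very different scales.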

Access

Email ai@uky.edu for more information.

Ownership

This platform is currently in beta testing, with plans for full deployment in the near future.

Resources

Temi uses LLM Factory as its provider for natural language understanding (Llama 3) and task planning (DeepSeek R1).

Developers Evan Damron and Sam Armstrong are working on this project.

- 20% of Sam Armstrong’s FTE for the life of the Supplement (1 year)

- 20% of Evan Damron’s FTE for the life of the Supplement (1 year)